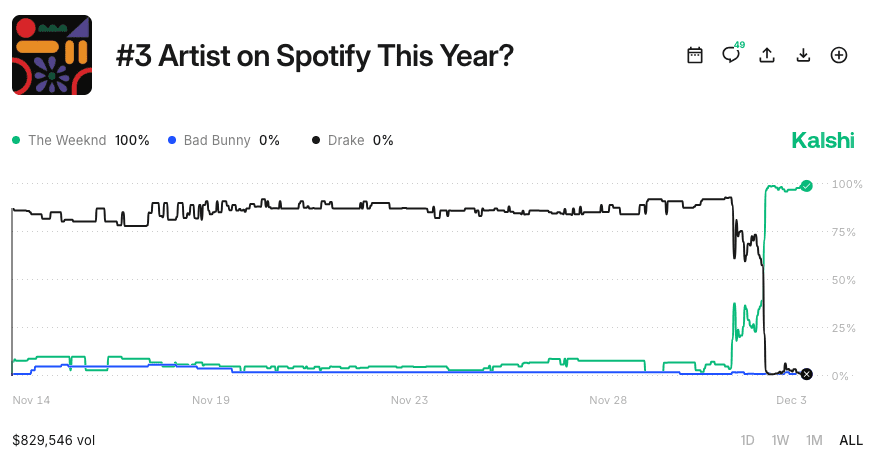

Hours before Spotify officially released its Wrapped 2025 rankings, a subset of prediction-market traders had already identified the full list of the year’s top global artists using, almost certainly, only publicly available information. That information contradicted the market’s high-confidence, priced-in prediction of Spotify’s top-artist rankings, an expectation informed by regularly published streaming data.

What these traders discovered by scraping the Spotify Newsroom site for pre-released web artifacts was that Drake, slated by the market to finish as the #3 highest-ranked artist, was actually going to appear as #4.

It is not clear whether the traders who uncovered the leak understood why this was the case (Spotify had quietly cleaned up streaming data by removing bot-generated streams). Regardless, they acted on it. In a matter of moments, they collapsed the price of the “yes” contract from the high 90s toward zero in a market with nearly $4 million in trading volume across both Polymarket and Kalshi.

Recreationally, I am a trader. And as a trader, I do not want to be on the wrong side of a flip like this, nor, dear reader, do I want you to be. So let us unpack how these leaks happen, the underlying structure of the internet that allows them, and some code you can use to detect such leaks and set stop orders in the event they occur.

How modern web infrastructure creates these leaks

In the early days of the internet, websites were coded manually and content was edited directly in the HTML or server files. Today, in response to the explosive growth of the web and the need for development teams to manage complex workflows, many organizations, including Spotify’s Newsroom, which originated this leak, use Content Management Systems (CMS). In Spotify’s case, the CMS was WordPress.

CMSs separate preparation from publication: teams upload images, PDFs, and metadata before writing or scheduling the associated post. The crucial technical detail is that uploading is often equivalent to publishing unless explicit access restrictions are configured.

To understand why a CMS can expose assets before they are visible on a site, we need to briefly discuss how modern websites expose information. Many sites, including WordPress installations, provide what is known as an API, or Application Programming Interface. In simple terms, an API is a standardized doorway that allows software to request information from a website in a structured, machine-readable way. Instead of a human scrolling through a webpage looking for an image, a computer program can ask the site directly: “What files do you currently have uploaded?”

Those responses typically come in JSON, a lightweight data format that looks like a tidy list of labels and values (“title”: “wrapped_2025_image.png”). JSON is readable to humans and trivial for machines to parse, which is why most web APIs rely on it.

With that context in mind, consider how WordPress handles media. Uploads are automatically exposed through its REST API, a built-in interface that returns information about uploaded files. By default, this media endpoint is publicly accessible:

https:///wp-json/wp/v2/media

This endpoint returns JSON containing the file name, the upload timestamp, alt text and caption, and the direct URL to the image.
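To make the shape of that response concrete, here is a small sketch that parses one media item. The field names follow the WordPress REST API media schema; the values, including the example.com URL, are invented for illustration, and `summarize()` is a helper name chosen for this example.

```python
import json

# Illustrative response body: field names follow the WordPress REST API media
# schema, but every value below is made up for this example.
SAMPLE_RESPONSE = """
[
  {
    "id": 101,
    "date": "2025-12-03T09:15:00",
    "title": {"rendered": "wrapped_2025_image"},
    "alt_text": "Top global artists",
    "caption": {"rendered": ""},
    "source_url": "https://example.com/wp-content/uploads/2025/12/wrapped_2025_image.png"
  }
]
"""


def summarize(item):
    """Pull out the fields a monitor would care about from one media item."""
    return {
        "id": item["id"],
        "uploaded": item["date"],
        "title": item.get("title", {}).get("rendered", ""),
        "alt_text": item.get("alt_text", ""),
        "caption": item.get("caption", {}).get("rendered", ""),
        "url": item.get("source_url", ""),
    }


summaries = [summarize(item) for item in json.loads(SAMPLE_RESPONSE)]
for s in summaries:
    print(s["uploaded"], s["title"], s["url"])
```

Each entry in the list is one uploaded file, so the upload timestamp and direct URL are available as soon as the file sits in the media library.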

Spotify’s Newsroom followed this default pattern. When the Wrapped graphics were uploaded into the media library, they immediately became accessible through the REST API, even though the accompanying press release had not yet been published.

How to practically use these signals to protect your positions

Of course, as a trader, you are not going to manually sift through dozens of web artifacts every day to protect a $50 position on Drake’s Spotify ranking. The good news is that you don’t have to.

Prediction-market traders increasingly employ tools that automate this detection process. What emerged organically in the Spotify case represents a generalizable strategy: identify the public endpoints a CMS exposes, automate periodic polling of those endpoints, detect the appearance of new assets prior to public announcements, and interpret these digital artifacts in relation to active prediction markets.

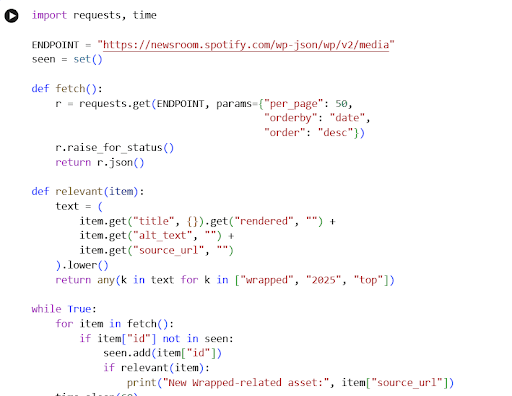

A minimal monitoring script requiring only basic familiarity with Python can operationalize this workflow. Below is a simplified example for WordPress environments:
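A minimal sketch of such a monitor, using only the Python standard library. The domain in `ENDPOINT` is a placeholder, the `check()` helper and `monitor()` wrapper are names chosen for this illustration, and the query parameters assume the site runs a stock WordPress REST API.

```python
import json
import time
import urllib.request

# Placeholder domain: substitute the site you are monitoring.
ENDPOINT = "https://example.com/wp-json/wp/v2/media?per_page=50&orderby=date&order=desc"
KEYWORDS = ("wrapped", "2025", "top")
POLL_SECONDS = 60

seen = set()  # IDs of media items already observed


def fetch(url=ENDPOINT):
    """Request the 50 most recent media items and parse the JSON response."""
    request = urllib.request.Request(url, headers={"User-Agent": "media-monitor/0.1"})
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


def relevant(item):
    """Return True if the title, alt text, or URL contains any keyword."""
    text = " ".join([
        item.get("title", {}).get("rendered", ""),
        item.get("alt_text", ""),
        item.get("source_url", ""),
    ]).lower()
    return any(keyword in text for keyword in KEYWORDS)


def check(items):
    """Record unseen IDs and return the new items that pass the filter."""
    hits = []
    for item in items:
        if item["id"] in seen:
            continue
        seen.add(item["id"])
        if relevant(item):
            hits.append(item)
    return hits


def monitor():
    """Poll the endpoint forever, printing each new, relevant upload."""
    while True:
        try:
            for item in check(fetch()):
                print("New relevant asset:", item.get("date"), item.get("source_url"))
        except (OSError, ValueError) as error:
            # Network failures and malformed JSON should not kill the monitor.
            print("Fetch failed:", error)
        time.sleep(POLL_SECONDS)
```

Calling `monitor()` starts the loop. On the first pass every item is unseen, so any existing relevant uploads are printed once before the script settles into reporting only new ones.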

How this monitoring code actually works

Conceptually, this script does three things.

First, it defines an endpoint (ENDPOINT) that points at the public WordPress media API. When fetch() is called, it sends an HTTP request asking for the 50 most recent media items, ordered by date. The API responds with JSON, which Python parses into a list of dictionaries. Each dictionary is one uploaded file with its metadata.

Second, it keeps track of what it has already seen. The seen set stores the IDs of media items the script has observed. Each time the script fetches the latest media, it loops through the items and checks whether the ID is new. This ensures you only react to fresh uploads, rather than spamming yourself with the same asset every minute.

Third, it applies a very simple relevance filter. The relevant() function looks at the title, the alt text, and the URL of each media item, converts them to lowercase, and checks whether any keyword you care about appears. Here, those keywords are “wrapped,” “2025,” and “top,” but in practice you would tailor these to the specific market: contract codes, names of nominees, tickers, or report titles.

The outer while True loop turns this into a monitor. Every 60 seconds, it hits the endpoint again, updates the seen set, and prints a message if something new and relevant appears. It is not exploiting a bug; it is automating exactly what a human could do manually by repeatedly loading the media page, only much faster and without fatigue.

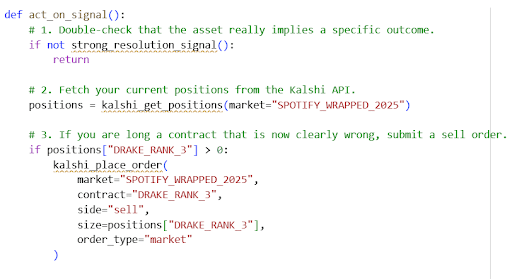

From signals to trades: Connecting monitoring to the Kalshi API

Detecting a leak is only half the story. For a prediction-market trader, the critical question is how quickly and safely you can turn that signal into an adjustment of your positions. Again, we want to automate. If your position is small, you don’t want to waste time constantly checking on it; if it is big, you want to be able to move before someone else crushes you.

In outline, a trader could:

- Define clear criteria for what counts as resolution evidence (for example, a media asset whose filename or alt text encodes the final ranking, or a PDF whose contents clearly match a contract’s resolution condition).

- Configure the monitoring script to send a notification or trigger whenever those criteria are met. That might be a terminal printout for a hobbyist or a webhook, email, or Slack message for a more serious setup.

- On confirmed evidence, submit an order through the Kalshi API that either:

  - closes an exposed position (for example, selling out of a “Drake #3” “yes” contract), or

  - opens a new position if the market has not yet moved and you are comfortable taking that risk.

At a basic level, the trading side might look like this in pseudocode:
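A minimal sketch of that flow in Python. It does not call Kalshi’s real API: `submit_order` is a hypothetical callable standing in for an authenticated client, the `Position` class and the order dictionary’s field names are invented for illustration, and the 1 to 99 cent price range is an assumption about how the contract is quoted.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Position:
    ticker: str     # hypothetical contract identifier
    side: str       # "yes" or "no"
    contracts: int  # number of contracts currently held


def build_exit_order(position: Position, floor_price_cents: int) -> dict:
    """Describe a limit order that sells the whole position at a floor price."""
    if not 1 <= floor_price_cents <= 99:
        raise ValueError("limit price must be between 1 and 99 cents")
    # Field names are illustrative, not Kalshi's actual order schema.
    return {
        "ticker": position.ticker,
        "action": "sell",
        "side": position.side,
        "count": position.contracts,
        "type": "limit",
        "price_cents": floor_price_cents,
    }


def on_signal(evidence_confirmed: bool, position: Position, floor_price_cents: int,
              submit_order: Callable[[dict], object]) -> Optional[dict]:
    """Close the exposed position only when the evidence criteria are met."""
    if not evidence_confirmed or position.contracts <= 0:
        return None
    order = build_exit_order(position, floor_price_cents)
    submit_order(order)  # hypothetical hook: wrap your authenticated client here
    return order


# Usage with a stand-in submitter that only records the order.
sent = []
position = Position(ticker="EXAMPLE-TICKER", side="yes", contracts=50)
order = on_signal(True, position, floor_price_cents=40, submit_order=sent.append)
print(order)
```

Passing the submitter in as an argument keeps the decision logic testable without credentials, and using a limit price rather than a market order caps how far below your floor the exit can fill.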

In practice, Kalshi’s API requires authentication, careful handling of rate limits, and explicit order parameters. You would store your API keys in environment variables or a secure configuration file, not directly in the script. You might also choose to use limit orders instead of market orders to avoid crossing a very wide spread in thin conditions. The point is not this exact function, but the architecture.

Why this matters for prediction markets

A check of the Spotify Newsroom API today shows it has now been locked, preventing a repeat of this specific leak. But this type of early-asset exposure isn’t unique to Wrapped. Similar incidents have affected:

- Nobel Prize announcements

- Government economic reports

- Corporate earnings releases

They all share the same underlying pattern: digital assets are staged on a CMS before publication, and unless permissions are configured correctly (which they often aren’t), those assets can surface through public APIs before the official announcement.

Any market tied to a scheduled release has potential leak surfaces, and automated monitoring can help ensure you’re not caught on the wrong side of a sudden price collapse driven by information that was technically “public” the entire time. Don’t let your portfolio become someone else’s alpha.